Spark installation

- Upload spark-3.3.4-bin-hadoop3-scala2.13.tgz to /tmp on every machine

# Extract

ssh_root.sh tar -zxf /tmp/spark-3.3.4-bin-hadoop3-scala2.13.tgz -C /usr/local

- Change the owner of the Spark directory to the hadoop user

ssh_root.sh chown -R hadoop:hadoop /usr/local/spark-3.3.4-bin-hadoop3-scala2.13/

- Create a symbolic link

ssh_root.sh ln -s /usr/local/spark-3.3.4-bin-hadoop3-scala2.13 /usr/local/spark
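This guide uses `ssh_root.sh` but does not define it. The sketch below shows what such a helper might look like, assuming it runs the same command as root over SSH on every host. The host list is an assumption: nn1, nn2 and nn3 appear in this guide, and dn1 and dn2 are hypothetical placeholders for the other two of the five hosts. `DRY_RUN` is an illustrative switch that makes the helper print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a helper like ssh_root.sh (not the original script).
# Assumed hosts: nn1-nn3 appear in this guide; dn1 and dn2 are placeholders.
HOSTS="nn1 nn2 nn3 dn1 dn2"

# Run the given command as root on every host in HOSTS.
# With DRY_RUN=1, only print the ssh commands instead of running them.
ssh_root() {
  local host
  for host in $HOSTS; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf 'ssh root@%s %s\n' "$host" "$*"
    else
      printf '==== %s ====\n' "$host"
      ssh "root@$host" "$*"
    fi
  done
}
```

As a standalone script, the file would end with `ssh_root "$@"`. A call such as `ssh_root.sh chown -R hadoop:hadoop /usr/local/spark-3.3.4-bin-hadoop3-scala2.13/` would then run once per host.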

Spark configuration

Files to configure: spark-env.sh and workers

# First, rename the template files

cd /usr/local/spark/conf

mv spark-env.sh.template spark-env.sh

mv workers.template workers
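Here is a variant of the renaming step, as a sketch. It copies instead of moving, which is a deviation from the original step, so the `.template` files stay available as a clean reference. It also skips any file that already exists, so rerunning it never overwrites an edited config. The function name and its directory argument are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: create spark-env.sh and workers from their templates.
# Copies (rather than moves) so the .template files are kept, and never
# overwrites a config file that already exists.
init_spark_conf() {
  local conf_dir="${1:-/usr/local/spark/conf}" name
  for name in spark-env.sh workers; do
    if [ ! -e "$conf_dir/$name" ]; then
      cp "$conf_dir/$name.template" "$conf_dir/$name"
    fi
  done
}
```

Usage: `init_spark_conf /usr/local/spark/conf`.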

# Edit spark-env.sh

vim spark-env.sh

# Append the following at the end of the file:

export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop

export SPARK_WORKER_CORES=2

export SPARK_WORKER_MEMORY=1G

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=nn1:2181,nn2:2181,nn3:2181 -Dspark.deploy.zookeeper.dir=/spark3"

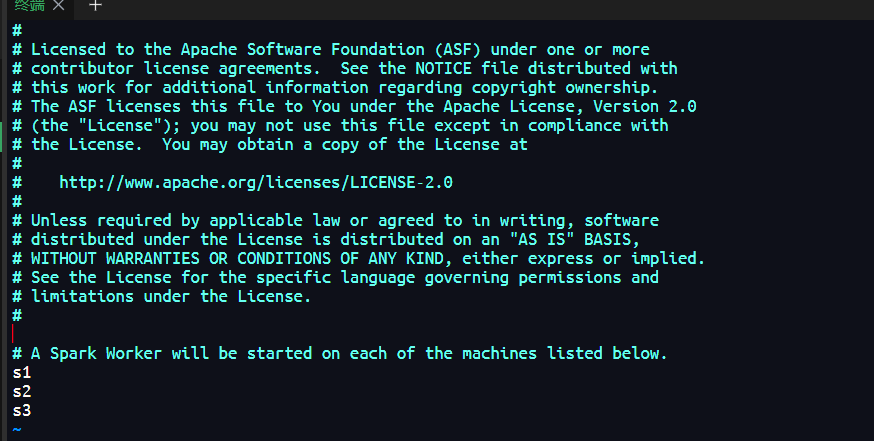
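The same four settings can also be appended without opening an editor, which helps when scripting the setup. The sketch below uses a quoted heredoc (`<<'EOF'`), so the text is written literally and nothing is expanded. The function name and its file argument are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: append the spark-env.sh settings from this guide non-interactively.
# The quoted 'EOF' delimiter writes the lines literally (no $ expansion).
append_spark_env() {
  local env_file="${1:-/usr/local/spark/conf/spark-env.sh}"
  cat >> "$env_file" <<'EOF'
export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop
export SPARK_WORKER_CORES=2
export SPARK_WORKER_MEMORY=1G
export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=nn1:2181,nn2:2181,nn3:2181 -Dspark.deploy.zookeeper.dir=/spark3"
EOF
}
```

Run it once, on the host where the config is edited, then distribute the file with `scp_all.sh` as the guide does.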

# Edit workers: list the worker hostnames, one per line

vim workers
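The `workers` file lists the hosts on which Spark's `start-workers.sh` and `start-all.sh` launch a Worker, one hostname per line. The guide does not name its worker hosts, so the hostnames in the usage line below are hypothetical. The sketch writes the file from a host list and reads it back the way Spark does, dropping `#` comments and blank lines. This is an approximation of the filtering in `sbin/workers.sh`, not a copy of it.

```shell
#!/usr/bin/env bash
# Sketch: write a Spark workers file, one hostname per line.
# Hostnames passed in are placeholders; use the cluster's real worker hosts.
write_workers() {
  local workers_file="$1"
  shift
  printf '%s\n' "$@" > "$workers_file"
}

# List the hosts Spark would read from a workers file. Comments (#...) and
# blank lines are dropped, approximating the filtering in sbin/workers.sh.
list_workers() {
  sed 's/#.*$//;/^[[:space:]]*$/d' "$1"
}
```

Usage with hypothetical hosts: `write_workers /usr/local/spark/conf/workers nn3 dn1 dn2`.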

# Distribute to the other hosts

scp_all.sh /usr/local/spark/conf/spark-env.sh /usr/local/spark/conf/

scp_all.sh /usr/local/spark/conf/workers /usr/local/spark/conf/
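Like `ssh_root.sh`, the `scp_all.sh` helper is not defined in this guide. The sketch below is hypothetical and assumes the helper copies a local file into the same directory on every other host. The host list repeats the earlier assumption (nn1-nn3 from the guide, dn1 and dn2 as placeholders). `SELF` and `DRY_RUN` are illustrative overrides: one sets the current hostname, the other prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a helper like scp_all.sh (not the original script).
# Assumed hosts: nn1-nn3 appear in this guide; dn1 and dn2 are placeholders.
HOSTS="nn1 nn2 nn3 dn1 dn2"

# Copy a local file to the same target directory on every host except this one.
# SELF overrides the detected short hostname; DRY_RUN=1 only prints the commands.
scp_all() {
  local src="$1" dest_dir="$2" self host
  self="${SELF:-$(hostname -s)}"
  for host in $HOSTS; do
    [ "$host" = "$self" ] && continue
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf 'scp %s %s:%s\n' "$src" "$host" "$dest_dir"
    else
      scp "$src" "$host:$dest_dir"
    fi
  done
}
```

Skipping the current host avoids copying a file onto itself when the helper runs on, for example, nn1.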

- Configure environment variables

# Add the settings to /etc/profile.d/myEnv.sh

echo 'export SPARK_HOME=/usr/local/spark' >> /etc/profile.d/myEnv.sh

echo 'export PATH=$PATH:$SPARK_HOME/bin' >> /etc/profile.d/myEnv.sh

echo 'export PATH=$PATH:$SPARK_HOME/sbin' >> /etc/profile.d/myEnv.sh
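Because `>>` appends unconditionally, running the three `echo` lines twice leaves duplicate entries in `myEnv.sh`. The sketch below adds an append-once guard that uses `grep -qxF` to check for the exact line before writing it. The function names are illustrative. As in the original commands, the single quotes keep `$PATH` and `$SPARK_HOME` literal in the file, so they are expanded only when the file is sourced.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not already present.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Write the Spark environment settings from this guide; safe to rerun.
# Single quotes keep $PATH and $SPARK_HOME unexpanded until the file is sourced.
write_spark_env() {
  local env_file="${1:-/etc/profile.d/myEnv.sh}"
  append_once 'export SPARK_HOME=/usr/local/spark' "$env_file"
  append_once 'export PATH=$PATH:$SPARK_HOME/bin' "$env_file"
  append_once 'export PATH=$PATH:$SPARK_HOME/sbin' "$env_file"
}
```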

# Distribute to the other hosts

scp_all.sh /etc/profile.d/myEnv.sh /etc/profile.d

# Run on each of the 5 hosts

source /etc/profile

Submit a test job (SparkPi)

spark-submit --master spark://nn1:7077,nn2:7077 \
  --executor-cores 2 \
  --executor-memory 1G \
  --total-executor-cores 6 \
  --class org.apache.spark.examples.SparkPi \
  /usr/local/spark/examples/jars/spark-examples_2.13-3.3.4.jar \
  10000
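This section shows how the submit flags fit the Worker settings in `spark-env.sh`. With 2 cores per executor and a cap of 6 cores in total, the job gets at most 3 executors. Each Worker offers 2 cores and 1G, so it can host exactly one such executor, and all 3 executors need at least 3 Workers. The sketch below computes that upper bound. It is a simplified model that ignores other running applications and assumes memory is given in MB (1G = 1024). The guide does not state the number of Workers, so that is a parameter.

```shell
#!/usr/bin/env bash
# Sketch: upper bound on executors for a standalone-mode submission.
# Args: total-executor-cores, executor-cores, executor memory (MB),
#       worker cores, worker memory (MB), number of workers.
max_executors() {
  local total_cores=$1 exec_cores=$2 exec_mem=$3 worker_cores=$4 worker_mem=$5 workers=$6
  local by_cap=$(( total_cores / exec_cores ))            # limit from --total-executor-cores
  local per_worker_cpu=$(( worker_cores / exec_cores ))   # executors per Worker by cores
  local per_worker_mem=$(( worker_mem / exec_mem ))       # executors per Worker by memory
  local per_worker=$(( per_worker_cpu < per_worker_mem ? per_worker_cpu : per_worker_mem ))
  local by_cluster=$(( per_worker * workers ))            # limit from cluster capacity
  echo $(( by_cap < by_cluster ? by_cap : by_cluster ))
}
```

For the settings in this guide with 3 Workers, `max_executors 6 2 1024 2 1024 3` prints 3. With only 2 Workers it prints 2.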

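Cluster startup script (starts ZooKeeper, then Hadoop):

#!/bin/bash
# Start ZooKeeper on the ZooKeeper hosts via the ssh_all_zk.sh helper
ssh_all_zk.sh ${ZOOKEEPER_HOME}/bin/zkServer.sh start
# Start HDFS and YARN; the full path matters because Spark's sbin (also on PATH) has its own start-all.sh
${HADOOP_HOME}/sbin/start-all.sh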